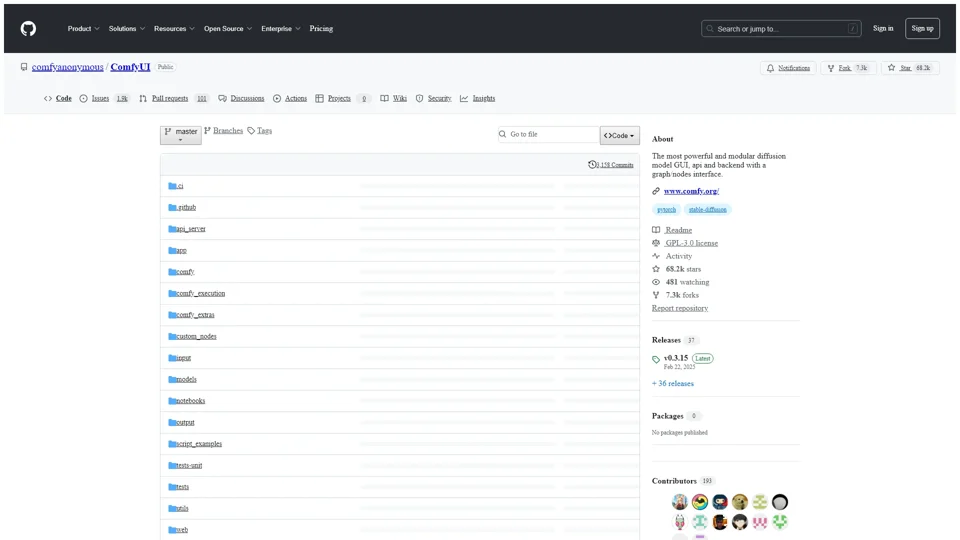

What is ComfyUI?

ComfyUI is a modular diffusion model GUI, API, and backend built around a graph/nodes interface. It lets users design and execute complex Stable Diffusion pipelines without writing code. It supports models for image, video, and audio generation, and offers features such as an asynchronous queue system, smart memory management, and support for multiple GPU configurations.

Main Features of ComfyUI

Nodes/Graph Interface

- Complex Workflows: Create intricate workflows using a visual nodes/graph interface.

- No Coding Required: Experiment with advanced Stable Diffusion pipelines without writing any code.
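To make the graph idea concrete, here is a minimal sketch of how a node graph can be evaluated. It is not ComfyUI's implementation: the node types (`Const`, `Add`, `Mul`) and the `run_graph` helper are hypothetical, and the dictionary layout is only loosely modelled on ComfyUI's exported workflow JSON, assuming each node carries a `class_type` and `inputs` and that a link is written as `[node_id, output_index]`.

```python
# Toy node implementations (hypothetical): each takes keyword inputs
# and returns a tuple of outputs.
NODE_TYPES = {
    "Const": lambda value: (value,),
    "Add": lambda a, b: (a + b,),
    "Mul": lambda a, b: (a * b,),
}


def run_graph(graph, target, cache=None):
    """Evaluate `target` and everything it depends on, reusing cached results."""
    cache = {} if cache is None else cache
    if target in cache:
        return cache[target]
    node = graph[target]
    resolved = {}
    for name, value in node["inputs"].items():
        if isinstance(value, list):
            # A link to another node: [source_node_id, output_index].
            source_id, output_index = value
            resolved[name] = run_graph(graph, source_id, cache)[output_index]
        else:
            # A literal value set directly on the node.
            resolved[name] = value
    cache[target] = NODE_TYPES[node["class_type"]](**resolved)
    return cache[target]


graph = {
    "1": {"class_type": "Const", "inputs": {"value": 2}},
    "2": {"class_type": "Const", "inputs": {"value": 5}},
    "3": {"class_type": "Add", "inputs": {"a": ["1", 0], "b": ["2", 0]}},
    "4": {"class_type": "Mul", "inputs": {"a": ["3", 0], "b": ["1", 0]}},
}
result = run_graph(graph, "4")[0]
print(result)  # (2 + 5) * 2 = 14
```

Node "1" feeds two other nodes but is computed only once, because its result is kept in `cache` for the rest of the run.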

Model Support

- Image Models: Supports SD1.x, SD2.x, SDXL, SDXL Turbo, Stable Cascade, SD3, SD3.5, Pixart Alpha, Sigma, AuraFlow, HunyuanDiT, Flux, Lumina Image 2.0.

- Video Models: Includes Stable Video Diffusion, Mochi, LTX-Video, Hunyuan Video, Nvidia Cosmos.

- Audio Models: Supports Stable Audio for audio generation.

Advanced Features

- Asynchronous Queue System: Efficiently manage multiple tasks.

- Optimizations: Re-executes only the parts of the workflow that change between executions.

- Smart Memory Management: Automatically runs models on GPUs with as little as 1GB of VRAM.

- CPU Fallback: Works even without a GPU.

- Model Formats: Loads ckpt, safetensors, diffusers models/checkpoints, standalone VAEs, CLIP models.

- Embeddings/Textual Inversion: Supports embeddings and textual inversion.

- LoRAs: Supports regular, LoCon, and LoHa LoRAs.

- Hypernetworks: Supports hypernetworks.

- Workflow Management: Load/save workflows from generated PNG, WebP, FLAC files; save/load as JSON.

- Area Composition and Inpainting: Supports area composition, and inpainting with both regular and inpainting models.

- ControlNet and T2I-Adapter: Enhances control over image generation.

- Upscale Models: Includes ESRGAN, SwinIR, Swin2SR, etc.

- unCLIP Models: Supports unCLIP models.

- GLIGEN: Integrates GLIGEN for advanced text-to-image generation.

- Model Merging: Allows merging of models.

- Latent Previews: Provides latent previews with TAESD.
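As an illustration of the workflow-in-PNG feature listed above, the sketch below reads text metadata out of raw PNG bytes using only the standard library. The assumptions are stated loudly: that the workflow JSON sits in an uncompressed `tEXt` chunk, and that the chunk's key is `workflow`. The helper names and the demo node are hypothetical, and the demo bytes are a structurally minimal PNG stream built in memory, not a viewable image.

```python
import json
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def read_png_text_chunks(data: bytes) -> dict:
    """Return the tEXt key/value pairs found in raw PNG bytes."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    texts = {}
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        length, chunk_type = struct.unpack(">I4s", data[pos:pos + 8])
        body = data[pos + 8:pos + 8 + length]
        if chunk_type == b"tEXt":
            # tEXt layout: keyword, a NUL separator, then Latin-1 text.
            key, _, value = body.partition(b"\x00")
            texts[key.decode("latin-1")] = value.decode("latin-1")
        elif chunk_type == b"IEND":
            break
        pos += 12 + length  # 4 length + 4 type + body + 4 CRC
    return texts


def make_chunk(chunk_type: bytes, body: bytes) -> bytes:
    """Build one PNG chunk (used here only to create demo bytes in memory)."""
    crc = zlib.crc32(chunk_type + body) & 0xFFFFFFFF
    return struct.pack(">I", len(body)) + chunk_type + body + struct.pack(">I", crc)


# Demo input: header, one text chunk with a hypothetical workflow, end marker.
demo_workflow = {"1": {"class_type": "ExampleNode", "inputs": {}}}
demo_png = (
    PNG_SIGNATURE
    + make_chunk(b"IHDR", struct.pack(">IIBBBBB", 1, 1, 8, 0, 0, 0, 0))
    + make_chunk(b"tEXt", b"workflow\x00" + json.dumps(demo_workflow).encode("latin-1"))
    + make_chunk(b"IEND", b"")
)

embedded = read_png_text_chunks(demo_png)
workflow = json.loads(embedded["workflow"])  # "workflow" key is an assumption
print(workflow["1"]["class_type"])
```

For a real file, pass the result of `open(path, "rb").read()` to `read_png_text_chunks` and inspect which keys come back before relying on any particular name.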

How to Use ComfyUI

Installation

- Windows: Download the portable standalone build from the releases page. Extract with 7-Zip and run. Place your Stable Diffusion checkpoints/models in ComfyUI\models\checkpoints.

- Jupyter Notebook: Use the provided Jupyter Notebook for services like Paperspace, Kaggle, or Colab.

- Manual Install (Windows, Linux): Clone the repository with Git, place your models in their respective directories, and install the dependencies with pip install -r requirements.txt.

Running ComfyUI

- Run python main.py to launch the application.
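Because ComfyUI is also an API and backend, a running instance can be driven from a script instead of the browser. The sketch below only builds the HTTP request and does not send it. The server address `http://127.0.0.1:8188`, the `/prompt` endpoint, and the `{"prompt": ...}` payload shape are assumptions not stated in this article, and `build_prompt_request` and `ExampleNode` are hypothetical names; check them against your own installation before use.

```python
import json
import urllib.request


def build_prompt_request(
    workflow: dict, server: str = "http://127.0.0.1:8188"
) -> urllib.request.Request:
    """Build (but do not send) a POST request that would queue `workflow`."""
    payload = json.dumps({"prompt": workflow}).encode("utf-8")
    return urllib.request.Request(
        f"{server}/prompt",  # assumed endpoint
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# A placeholder workflow; a real one would come from a saved workflow JSON file.
request = build_prompt_request({"1": {"class_type": "ExampleNode", "inputs": {}}})
print(request.full_url)

# To actually queue it against a running server:
# with urllib.request.urlopen(request) as response:
#     print(json.load(response))
```

Keeping request construction separate from sending makes it easy to inspect the payload first, which helps when a workflow is rejected by the server.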

Helpful Tips

- Shortcuts: Utilize keybinds for efficient navigation and operations within the interface.

- Troubleshooting: For issues like "Torch not compiled with CUDA enabled," uninstall and reinstall torch using the appropriate command.

- Dependencies: Ensure all dependencies are installed correctly by running pip install -r requirements.txt.

Frequently Asked Questions

Q: Can I share models between another UI and ComfyUI?

A: Yes. Use the config file to set search paths for models: rename the example file that ships with ComfyUI to extra_model_paths.yaml, then edit it with your preferred text editor so it points at the other UI's model folders.

Q: What if I encounter the "Torch not compiled with CUDA enabled" error?

A: Uninstall torch using pip uninstall torch and reinstall it with the correct command for your GPU setup.
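Before reinstalling, it can help to confirm what the installed PyTorch build actually supports. The following is a small diagnostic sketch rather than an official ComfyUI tool; `describe_torch_backend` is a hypothetical helper, and it deliberately tolerates PyTorch being absent so it can run in any environment.

```python
def describe_torch_backend() -> str:
    """Report which compute backend the installed PyTorch build can use."""
    try:
        import torch
    except ImportError:
        return "torch is not installed"
    if torch.cuda.is_available():
        # ROCm builds of PyTorch also report through the torch.cuda API.
        return f"GPU available: {torch.cuda.get_device_name(0)}"
    mps = getattr(torch.backends, "mps", None)
    if mps is not None and mps.is_available():
        return "Apple MPS backend available"
    if torch.version.cuda is None and getattr(torch.version, "hip", None) is None:
        # A build without CUDA or ROCm support is the usual cause of
        # "Torch not compiled with CUDA enabled".
        return "CPU-only build of torch"
    return "GPU-enabled build of torch, but no usable GPU was detected"


status = describe_torch_backend()
print(status)
```

If the result is "CPU-only build of torch" on a machine with a supported GPU, reinstalling torch with the command matching your GPU setup is the likely fix.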

Q: Is ComfyUI compatible with AMD GPUs?

A: Yes, AMD users can install ROCm and PyTorch using pip commands tailored for their specific GPU architecture.

Q: Can ComfyUI run on Apple Mac silicon?

A: Yes. Install the latest PyTorch nightly, then follow the manual installation instructions for Windows and Linux.

Q: How do I enable experimental memory-efficient attention on RDNA3 GPUs?

A: Use the command TORCH_ROCM_AOTRITON_ENABLE_EXPERIMENTAL=1 python main.py --use-pytorch-cross-attention.

Pricing

ComfyUI is open-source and free to use. However, donations and sponsorships are welcome to support ongoing development and maintenance.

Keywords

ComfyUI, Stable Diffusion, GUI, API, Backend, Graph/Nodes Interface, Workflow, Image Generation, Video Generation, Audio Generation, Asynchronous Queue, Smart Memory Management, CPU Fallback, Model Formats, Embeddings, Textual Inversion, Loras, Hypernetworks, Workflow Management, Area Composition, Inpainting, ControlNet, T2I-Adapter, Upscale Models, unCLIP Models, GLIGEN, Model Merging, Latent Previews, Shortcuts, Troubleshooting, Dependencies, AMD GPUs, Apple Mac Silicon, Experimental Memory-Efficient Attention, Pricing